Meeting Date & Time

- Next meeting

- 09:00-10:00 PT / 16:00-17:00 UTC

Zoom Meeting Links / Recordings

Meeting: https://zoom.us/j/98931559152?pwd=d0ZwM1JHQ3d5cXRqVTh4NlRHeVJvQT09

Recording: No recording

Attendees

- Wenjing Chu X

- Anita Rao X

- Jacob Yunger

- Neil Thomson

- @Alex Khachaturian X

- Mary Lacity

- Judith Fleenor X

- Steven Milstein

- Savita Farooqui

- Daniel Bachenheimer

Main Goal of this Meeting

This is the AIM TF's #28 meeting.

One of our main goals is to have individual member presentations on what problems/challenges they see in AI & Metaverse related to trust.

Starting in the new year (2023), we plan to start drafting white papers or other types of deliverables of the task force.

Agenda Items and Notes (including all relevant links)

| Time | Agenda Item | Lead | Notes |

| 5 min |

| Chairs |

|

| 10 mins |

| All |

|

| US Copyright Office NoI | All | Planned:

In Meeting: There was minimal discussion on extending existing comments in the response draft, and no one offered additional written contributions beyond the comments in the draft. General consensus is that there was insufficient understanding of the issues and insufficient time (lots of conferences and other commitments) to produce a comprehensive set of comments by the Oct 18 deadline. However, there was agreement that these are important issues to understand, most of which are beyond just the copyright aspects of AI. It is suggested that:

| |

| General Comments on AI, Copyright | All | The following topics/issues came up in discussions:

Details Mary Lacity's experience with ChatGPT, including asking for sources:

Mary Lacity's experience with the Copyright Office: if you want to influence them, then you need to have direct contact with staff within the Copyright Office (vs submissions) Savita Farooqui’s experience (screenshots) is working with organizations (Savita details?); they have found that ChatGPT is not reliable for accessing and interpreting data, which has driven the need for best practices on both providing data to LLM models and on “crafting” of prompts/queries. Steven Milstein - being able to control the processing of data into Vector DBs, including adding/extending metadata, is highly desirable (to control data use/training better). See the screenshot below as an example. The context (e.g., who, what, when, where, why, how) for the data is an example of metadata. It does have an advantage over (non-AI) Google searches in that you have some control over directing the use of data and how to derive answers, which includes being able to direct ChatGPT to self-check answers (including recursively). Discussion on attribution (which applies to humans as well) is that attribution is pragmatic on the main influences for derived work (top 1-5) vs. every book, paper, article, etc., that was used, read or reviewed. It was also suggested that any LLM should have a set of test suites, including repeatability tests for continually updated datasets. Test results would have to be verifiable by separate approaches, which are essential to verifiability and trust with respect to the data and resulting LLM model. Steven and Savita discussed self-checking and verification Jacob Yunger - how do you direct (what is best practice) to ensure a useful result Neil Thomson - I’m unclear why this technology does not have a clear body of knowledge, provided by the developers, as to how to prepare and control data input/training, plus the “syntax”, structure and configuration controls that are compatible with the internal design. I find it odd that people expect success from using trial/error to reverse engineer how to get useful, trustable answers. At least one lawsuit claims a violation of “fair use” (including Game of Thrones author RR. Martin). Question: would the objection(s) be dropped if GenAI attributed the source of derivative work to those author’s works? General consensus - to be useful/trustable, GenAI needs to emulate academic researchers in providing citations to data and answers (and the data they are based on) to support verification through alternate data sources/processing models. LLM training means that, in most cases, the data used is not current. ChatGPT models currently offered include data training from Sep 2021 Dan Backenheimer - With the exception of direct quotes (which are permitted copying with attribution), if a GenAI model/engine output is to be copyrightable, then it has to be demonstrably a derived work of one/multiple sources | |

| 5 mins |

| Chairs |

Not addressed in this meeting |

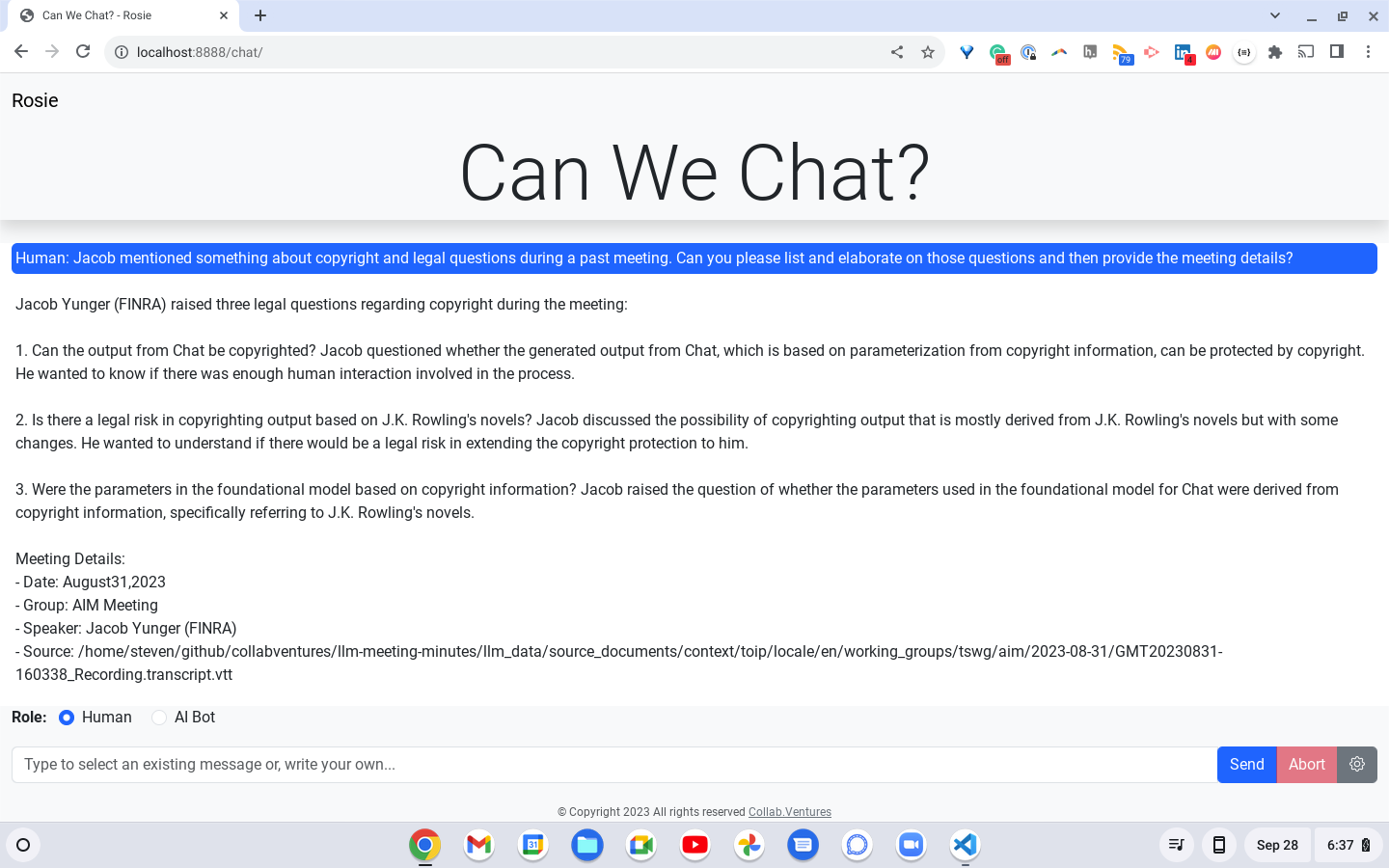

Screenshot example of trained AI searching across multiple meetings and providing metadata with its response.