10th February, 2022

Speaker Bio

Lisa is a Digital Sociologist at Ministry of Justice Digital (UK Government) and an Associate Lecturer at Goldsmiths, Cardiff University and the University of Plymouth (UK). For more than a decade, Lisa has researched and written about the relationship between technology, information and society for a diverse range of organisations across the public, private and third sectors. In 2020, the British Interactive Media Association (BIMA) named her as one of Britain’s 100 people who are positively shaping the British digital industry, in the category Champion for Change. She is a member of the AI Council for BIMA, Co-Chair of Sovrin Foundation’s Guardianship working group and was previously a taskforce member for the All-Party Parliamentary Group for Blockchain Cities. Her talk, Technology is not a product, it's a system, is available for viewing on TED.com.


Intro & Summary

The social, economic and political impact of technology over the last decade has resulted in a number of cascading consequences that have been devastating at worst and cringe-worthy at best. While ethical principles and frameworks have been helpful, many practitioners have found them difficult to put into action to improve design outcomes and the overall human experience. This talk takes aim at that confusion and uncertainty by providing practical guidance and examples of how to implement ethics in the creation and design of technology.

Ethics doesn’t have to be complex, feared or pushed to the side. Innovation is often driven by technology while overlooking the human aspects of agency, perceived safety and inclusivity. In our first Expert Series, Ms Lisa Talia Moretti takes us on a journey to discover why #ethics should be an agenda item at the pre-mortem stage rather than an afterthought. Infused with insights and practical examples from the UK Ministry of Justice’s digital journey around Lasting Power of Attorney (LPA), the talk surfaces the rituals and interconnected systems that play a role in the ethical consideration of all stakeholder groups and their empowerment.

Lisa is a Digital Sociologist working at the intersection of service design and user research design. She has a degree in Digital Sociology from the University of London and 15+ years of experience that began with user experience (UX) research, moved through marketing and came to focus on digital products. Her work surfaces ways to nourish society by studying what emerges at the interface of computing, data and technology, media, social research and social life.

It is perhaps easier to explain ethics by listing a few things it is not:

Ethics is not about following the law. Many things, legal as they may be, are not necessarily ethical.

Ethics is not a religion

Ethics is not a culturally accepted norm

Ethics is not science

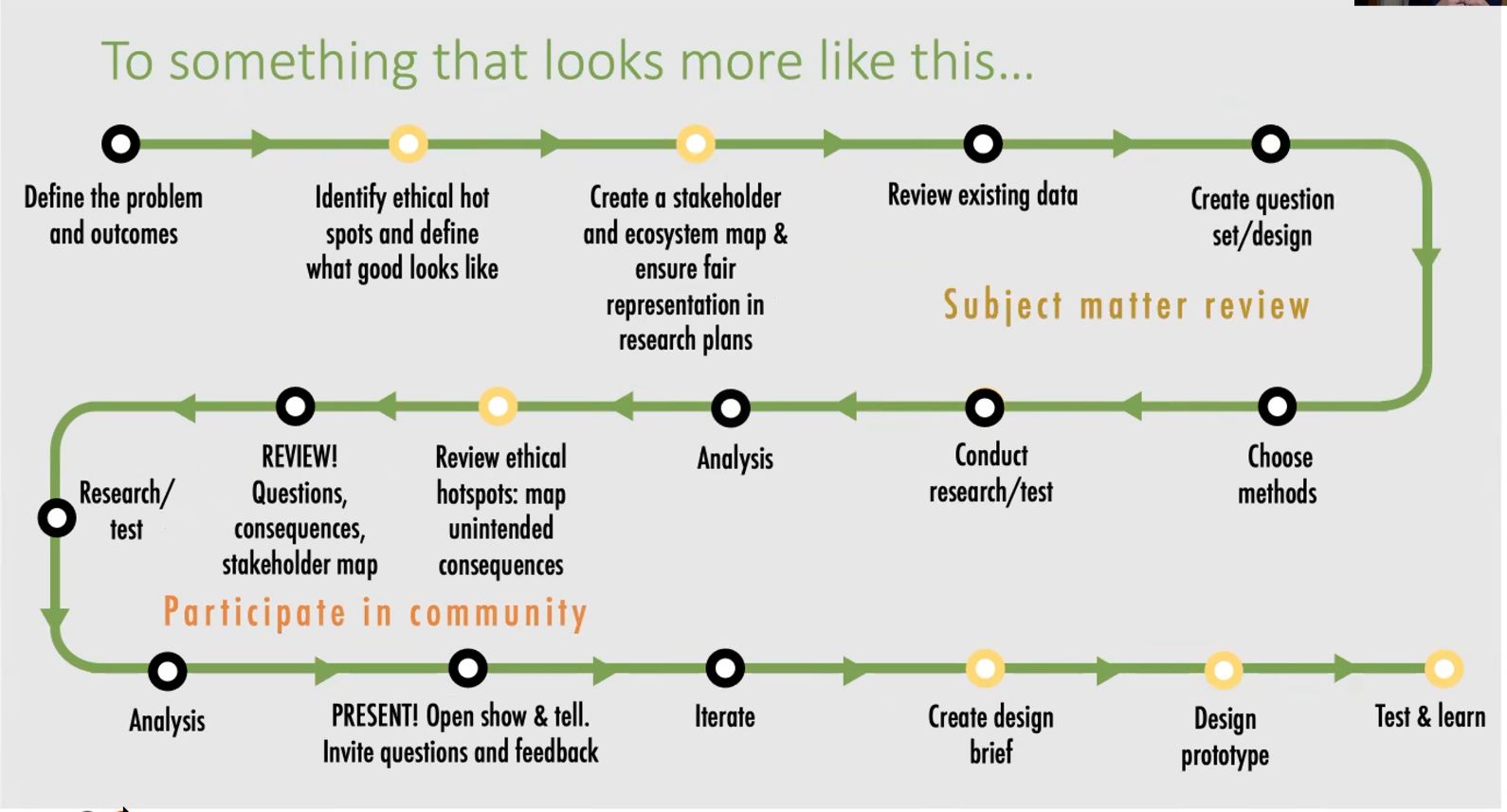

Moving from theory to practice begins with simple steps.

“You do not need a degree in philosophy but an open mind, analytical and critical thinking, and active listening. This will allow you to take on different perspectives, which is the core part.”

Technology and, by extension, data are increasingly shaping our world, what humans perceive to be good or bad, and what it means to live a good life. We therefore need to understand the impact technology has, and to recognise that imposing technology on society without understanding the implications is counter-productive.

Technology is not a product or a piece of IT software but a system integrated with the other systems in which we live our lives. As technology disrupts social systems, it disrupts our lives.

There is no ethics drive-thru

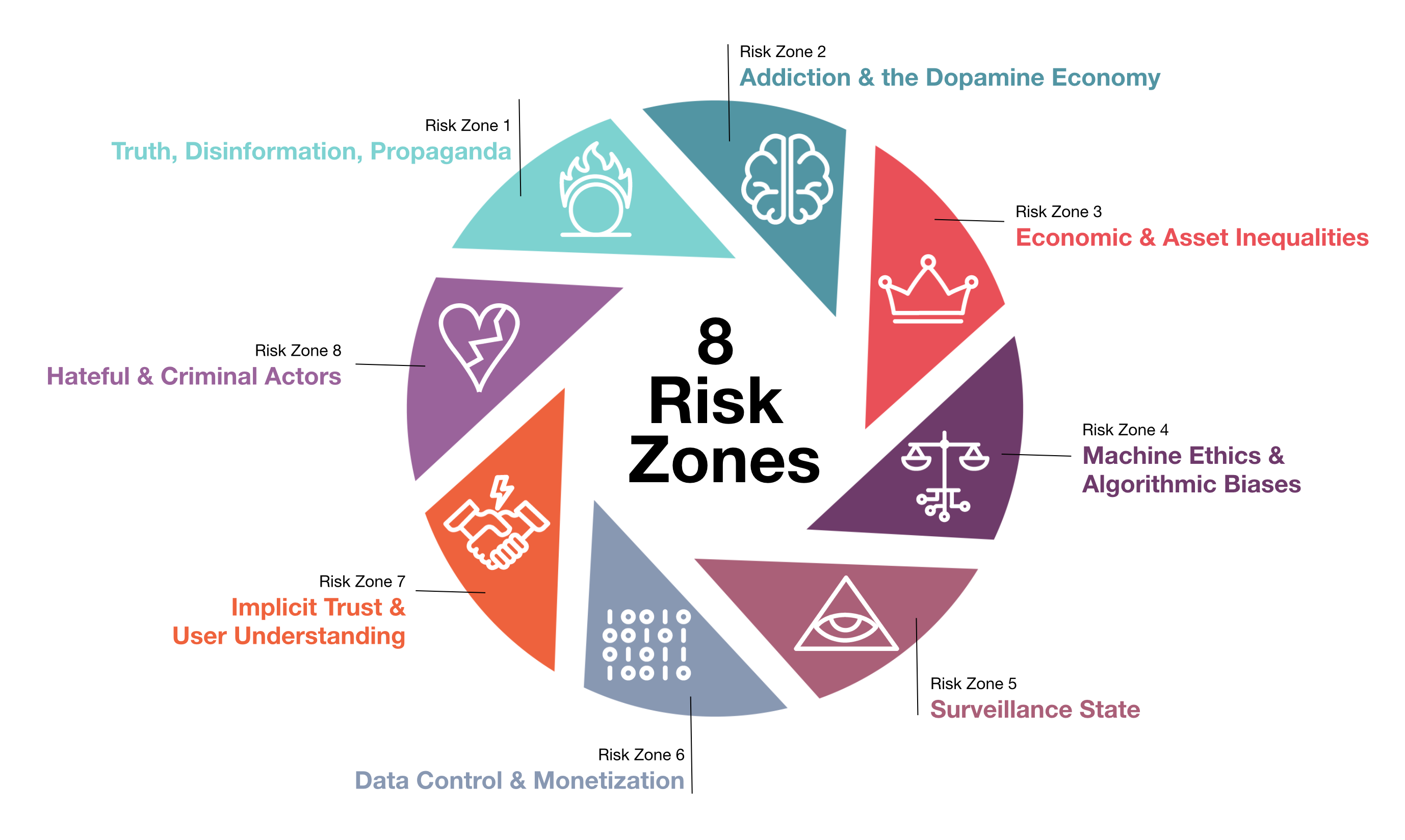

Ethical issues arise:

when we think a decision or situation could negatively impact an individual or group

when we are faced with a choice between two ‘good’ options or two ‘bad’ options

when we are faced with a ‘good’ option and a ‘bad’ option that each come with their own set of negative outcomes

To navigate this, we should chart a starting point by defining “what good looks like”. One example is how the MLPA team defined what good digital ID practice would look like:

Be inclusive

Uphold consent

Ensure privacy by default

Design for the many not the few

Foster trust

Implement and sustain accountable governance structures

In mapping the systems involved in the Lasting Power of Attorney (LPA), we started by acknowledging that technology facilitates the transactions these systems have with one another: the justice system, the information system, the healthcare system, the financial system, social networks and systems. Surfacing the things that could go wrong and go right helps us focus on and amplify the behaviours we wish to incentivise.


Questions we might ask during our journey are:

What are we optimising for? Why?

What actions do we need to take to... (live a good life, run a good government, design a good service)?

More questions can be found here: []

Tips on preparing the journey:

Allow enough time to think: a better project pre-mortem is key!

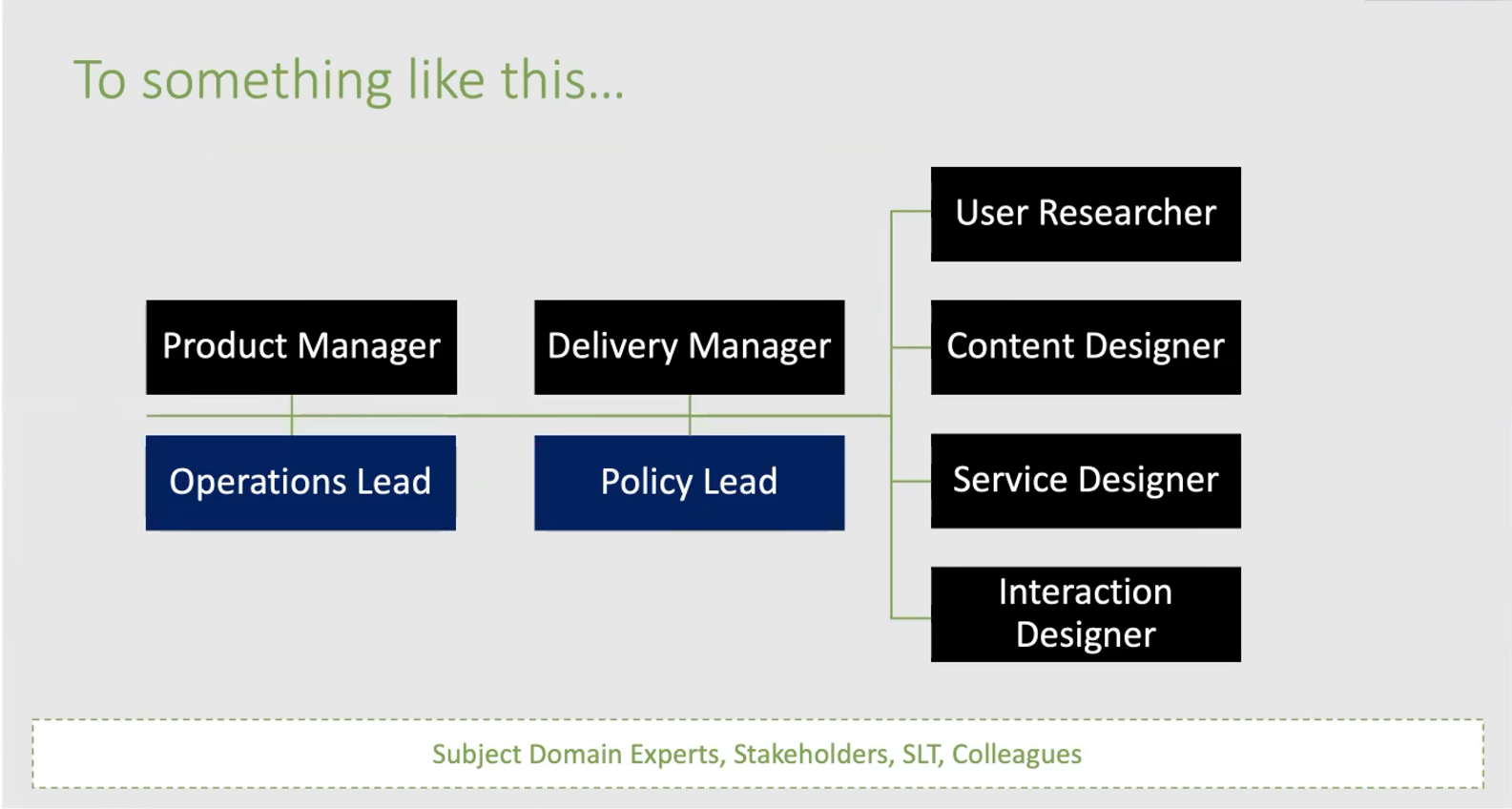

Build a diverse socio-technical team that includes technical, design, data and ops backgrounds

Create psychological safety

Distribute power equally

Develop a project language with shared meaning, e.g. for terms such as fairness, safeguards vs safeguarding, acronyms and other items which may be interpreted subjectively

Document the process and educate the system

Hold regular show & tell sessions

Pick a framework that helps: e.g. Edward de Bono’s

Start small and then build additional practices over time

The goal is to expose all team members equally to the stakeholders, experts, decision makers and colleagues.
